Are we getting Cloud Deployments wrong and are Unikernels the Solution?

Improving Sustainability, Cost and Performance

For this edition of the newsletter I have asked Felipe Huici from Unikraft to write a post about the emerging space of optimizing cloud deployments with unikernels, which is an interesting area to explore when improving the sustainability, cost and performance of cloud applications. I personally do not have experience with unikernels yet, but I am interested in how the technology evolves.

Disclaimer: No financial compensation has been received for this collaboration. The views and opinions expressed in this post are those of the guest author. The post is based on the questions I have asked Felipe. Thanks to Felipe for explaining the topic in such a great way.

The cloud powers a huge range of businesses reliably and scalably every day, but is everything running as it should under the hood?

Seconds-long cold boots, terrible auto-scale times, minutes-long waits for compute nodes to come up: these are standard headaches that cloud engineers have to deal with, and work around, on a daily basis. Not to mention the use of bloated images: for example, the binary for NGINX is just a couple of MBs, but once deployed it can become a 1GB monster requiring 100s of MBs of memory to actually run.

The result is that yes, the cloud works, but we’re using far more servers and resources than our workloads actually need, with a number of important detrimental effects:

Complexity: having to figure out work-arounds for cold boots or slow autoscale complicates deployments, makes them more brittle, and takes time away from teams that could be better spent on other features.

Cost: having to use 10x the number of pods/servers to run a service or workload clearly isn’t good for the bottom line.

Sustainability: the age-old method of just throwing hardware (or instances) at the problem isn’t great for the environmental challenges we’re facing.

Much of this stems from the fact that cloud infrastructure relies on technology that was never intended for the cloud: general-purpose OSes (e.g., Linux, FreeBSD) and mainstream distributions (e.g., Debian, Ubuntu) are just that: general purpose. For cloud deployments we know what we’re going to deploy before we deploy it, so what we’d like is some magic technology and tool that could automatically build, for each app, a fully customized image, all the way down to the OS, without forcing applications to be modified.

That magic tech and tool now exists! Unikraft (www.unikraft.org), a Linux Foundation OSS project, is a fully modular operating system and unikernel build kit. In the rest of this article we’ll cover what a unikernel is, what (massive) benefits unikernels and Unikraft can provide, and how these compare to other technologies like containers. Time to take a great (cloud) leap forward!

What is a Unikernel and What are its Benefits?

Simply put, a unikernel is a specialized virtual machine. Specialized in the sense that the VM image should contain only the code needed for the application to run, and nothing more; this includes operating system primitives such as memory allocators, schedulers and network stacks. To achieve that, the underlying OS that a unikernel is built from should ideally be fully modular, so that it’s relatively easy to pick and choose the functionality that each application needs (Unikraft is fully modular; Linux is a monolithic OS).

Another typical property of a unikernel is that it has a single memory address space, that is, there is no user-space/kernel-space separation. This isn’t a strict requirement and can be relaxed: unikernels can have multiple address spaces. Since a unikernel is a VM, the (hardware) isolation is provided by the hypervisor underneath (e.g., Xen or KVM). This means that single-address-space unikernels avoid the overhead of user/kernel context switches and so are more efficient, especially when carrying out tasks such as I/O.

In all, if all we want to do is run a 5MB NGINX application in the cloud, it makes little sense to deploy 1GB images that consume huge resources and contain large amounts of unneeded code that could lead to vulnerabilities. While the reason behind this status quo is understandable (Linux just works!), unikernels are a much more logical way forward for cloud deployments. To make this more tangible (and enticing), here are a few properties and numbers for Unikraft:

⚡️ Blazingly fast boot/suspend/resume times, in milliseconds

🔥 High performance, up to 2x the throughput of Linux

⏬ Low memory consumption, a few MBs for off-the-shelf apps

🗜️ Tiny images, only a few MBs in size

🐇 Low latency

🔐 Minimal TCB (trusted computing base): if the app doesn’t need it, it doesn’t get deployed

💪 POSIX compliant: no need to modify your app!

But Building and Using a Unikernel Sounds Complicated…

It certainly used to be, and it’s unsurprising that unikernel projects have mostly attracted OS developers and low-level hackers. Having said that, there is no fundamental reason why unikernels should be complicated to use; if they are, that’s the result of a particular unikernel project or implementation.

At Unikraft, we have spent a lot of effort (and years) making Unikraft seamless to use, so that you can just concentrate on the application you’d like to deploy. How simple is it? First, run this single command to install kraft, the Unikraft native tool (for Linux, though macOS and Windows/WSL are also supported):

Then, to test that the installation worked and to build and run your very first unikernel, run:

That’s it! For running more advanced apps/langs (e.g., NGINX, Redis, SQLite, Python, Go, C++), you can head on over to https://unikraft.org/docs/cli. Oh, and Unikraft also comes with Docker and Kubernetes integrations. Finally, if you’d like to taste the power of a unikernel-powered cloud platform, check out https://kraft.cloud/.

Unikraft is not Linux, what do I Lose if I Use it?

Unikraft mimics the Linux API so that applications and languages can run, unmodified, on Unikraft. But under that API Unikraft is certainly not Linux (for one thing, it’s fully modular, as explained above). So would adopting Unikraft mean that we’d have to sacrifice functionality?

One common concern leveled at unikernels is that debugging them is some sort of mysterious black art only accessible to the chosen few. While it is true that in the past debugging some unikernels was complex, and sometimes as cumbersome as having to introduce printf() statements in code, that was just a result of those unikernel implementations, not anything fundamental about unikernels. Unikraft comes with a full gdb server, tracing and other debugging facilities, so that debugging in Unikraft is no different from doing so in a Linux environment (see here).

In terms of observability, Unikraft comes with an (optionally enabled) Prometheus library through which a Unikraft unikernel can export stats to Grafana dashboards. By default, Unikraft doesn’t come with an ssh server (arguably most deployments don’t need it, and having it opens up an attack vector) but does come with facilities for dynamically configuring a running unikernel.

Finally, Unikraft’s explicit goal of complying with the Linux API means that applications and languages think they’re running on Linux, and so require no particular modifications to run on Unikraft. The only caveat is processes and their related system calls (e.g., fork()), given that unikernels tend not to have multiple address spaces (remember above?). The first-level answer to this is that very few modern, popular cloud applications rely on processes, and some that do have configuration parameters to use threads instead. For the remaining few (e.g., Apache HTTP server), we’re working on a solution, the explanation of which we’ll leave to a separate article.
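To make the threads-versus-processes point a bit more concrete, here is a minimal Python sketch of a worker pool built on threads rather than forked processes. It is not Unikraft-specific and not taken from any particular application: `handle_request` and `serve` are hypothetical names used purely for illustration. The point is only that all workers live in one address space, so no fork() is involved.

```python
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id: int) -> str:
    # Hypothetical handler: a real server would parse and answer a request here.
    return f"request {request_id} handled"


def serve(request_ids, workers: int = 4) -> list:
    # Threads share the single address space of the process, so the pool
    # never needs fork() or any other process-creating system call.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the inputs.
        return list(pool.map(handle_request, request_ids))


if __name__ == "__main__":
    for line in serve(range(3)):
        print(line)
```

An application structured like this (or one that can be configured to run this way) sidesteps the process caveat described above.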

Is Unikraft Meant to Replace Containers?

No, not really. In fact, Unikraft uses Docker and Dockerfiles to allow users to specify the applications/languages they’d like to build and run. That is, containers/Docker are an extremely useful tool for development environments and for building images.

Having said that, when it comes to deployment, containers are neither secure nor efficient/lightweight. I won’t go into detail about the large number of security issues with containers, since those have been covered extensively by others (in fact, more often than not, containers running in the cloud are placed inside a VM for isolation purposes).

For deployments we argue that unikernels are the right tech for the job; with Unikraft, you can, in essence, have your cake and eat it too:

Security: strong, hardware-level isolation (they’re virtual machines after all!)

Performance/efficiency: millisecond boot/suspend/resume times, higher throughput than Linux, etc.

Usability: a few commands to have a unikernel built and deployed

As the diagram above shows, existing technologies cannot provide all 3 at once.

What About Related Projects?

While providing a comprehensive list of other unikernel projects or projects that aim to provide more efficient cloud deployments is out of scope for this article, here’s a brief list:

Single app/lang projects: Projects like MirageOS (OCaml), GraalVM (Java) or LING (Erlang) can only run a single language; Unikraft has no such restrictions.

Slimming projects: projects like Docker Slim try to take a Linux-based distro/container and slim it down. Underneath, though, it’s still Linux running in the image, and there’s only so much slimming you can do (see the comparison below between Docker images and Unikraft).

Turning Linux into a unikernel: you can read about why this isn’t the answer here 🙂

Wasm: relies only on language-level isolation (not hardware-level isolation) and cannot run every workload out there. In fact, the best-case scenario would be to run a Wasm runtime within a unikernel (as done for example here with Unikraft and wasm3). Unikraft has support for Intel’s WAMR and we’ll be adding support for one of the main runtimes soon.

Perf Graphs!

Hopefully I’ve made unikernels enticing enough to take them out for a quick spin! Before finishing, a quick question: how many NGINX VMs (read: unikernels of course! 🙂) can you stuff into a 64-core server? Answer:

Yes, that’s close to 100K, and certainly a far cry from the 10-200 you might see in current production environments.
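As a quick back-of-the-envelope check on those figures, the short Python sketch below turns them into instances per core and a density gain. It assumes a rounded figure of 100,000 instances (the article says "close to 100K") and uses the 10-200 range quoted above; the function names are hypothetical.

```python
SERVER_CORES = 64
UNIKERNEL_INSTANCES = 100_000  # assumption: "close to 100K", rounded up
TYPICAL_INSTANCES = (10, 200)  # range quoted for current production environments


def instances_per_core(instances: int, cores: int = SERVER_CORES) -> float:
    # Average number of VM instances sharing each physical core.
    return instances / cores


def density_gain(unikernels: int, typical: int) -> float:
    # How many times more instances fit on the same server.
    return unikernels / typical


if __name__ == "__main__":
    print(f"instances per core: {instances_per_core(UNIKERNEL_INSTANCES):.1f}")
    for typical in TYPICAL_INSTANCES:
        gain = density_gain(UNIKERNEL_INSTANCES, typical)
        print(f"vs {typical} instances: {gain:.0f}x denser")
```

Under these rounded assumptions, that works out to roughly 1,500 instances per core, and between 500x and 10,000x the density of the quoted production range.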

What about cold boot times? How long would it take to cold boot a virtual machine? Hint: it’s not seconds 🙂:

As shown, Unikraft has cold boot times that are two orders of magnitude lower than those of Linux; one could even argue that those boot times are no longer all that cold 🙂. Also note that for Linux we built a customized image to be fair to it; most standard images would result in longer boot times.

Regarding throughput, remember I mentioned that unikernels tend to be more I/O efficient than general-purpose OSes? Here’s a graph of NGINX on Unikraft vs. Linux:

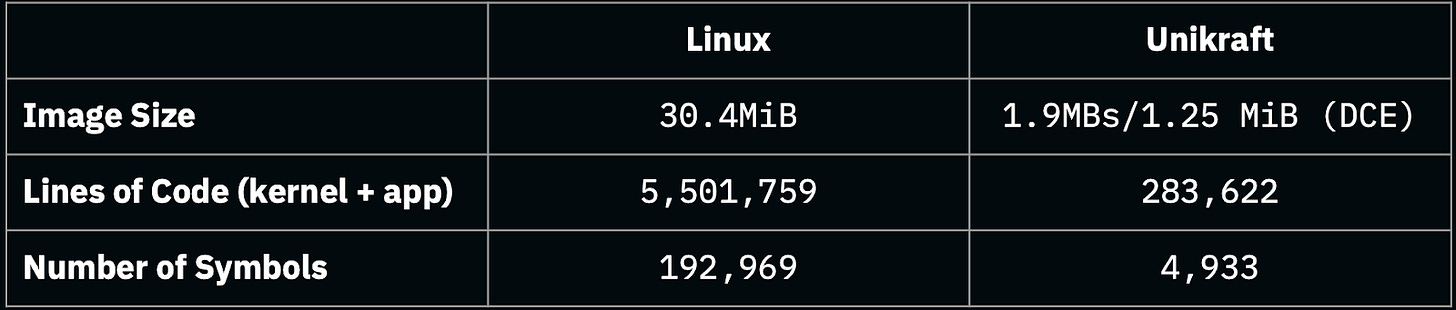

As a final measurement, we looked at image size, number of lines of code and symbols of an NGINX + Unikraft image versus an NGINX + Linux one:

The numbers show the effect of specialization: even though the application is exactly the same in both columns, the resulting image certainly isn’t. To loop back to this article’s title, are we getting cloud deployments wrong? 🙂

Final Word

So come join the unikernel revolution, and please do drop me a line: I love feedback of any kind (felipe@unikraft.io, I’m also on LinkedIn and Twitter). And if you have a moment, please star us on GitHub and/or join our Unikraft Discord server.



